AI performance optimization is the process of enhancing artificial intelligence models to run faster, use fewer resources, and deliver better results without sacrificing accuracy. The goal is to improve model efficiency, speed, resource management, and cost-effectiveness while maintaining or improving prediction quality.

An AI system might be incredibly smart, but if it’s slow or expensive to run, it isn’t delivering real business value. Models like OpenAI’s GPT-3, with 175 billion parameters, require enormous computational resources. According to McKinsey & Company, businesses that effectively adopt AI could potentially double their cash flow by 2030, and capturing that value depends on systems that are efficient enough for practical use.

Modern AI models have grown dramatically in complexity, leading to:

- Skyrocketing infrastructure costs

- Slow response times that limit real-time applications

- High energy consumption

- Deployment limitations on edge devices or mobile apps

AI model optimization addresses these problems directly. It’s the bridge between having a powerful AI and having a practical AI that drives business results by balancing performance, reliability, and efficiency.

The “Why” and “What”: Goals of AI Performance Optimization

The goals of AI optimization fall into two main categories: enhancing operational efficiency and boosting model effectiveness.

Enhancing Operational Efficiency

Operational efficiency means getting more done with less. An efficient AI saves money, delivers faster responses, and reduces IT strain.

- Reduced latency: Real-time applications like fraud detection or customer service chatbots need to respond in milliseconds. Optimization makes this possible.

- Lower computational costs: Smart optimization can significantly cut computing resource usage. Some e-commerce companies have cut usage by 40%, turning expensive AI experiments into profitable tools.

- Better scalability: An optimized model can handle more users and data on less powerful hardware, opening up possibilities for mobile or edge computing.

- Energy reduction: Lower energy consumption means reduced infrastructure costs and overhead.

Want to see how optimization could impact your specific situation? Check out our AI Impact Calculator to get a clearer picture of potential savings.

Boosting Model Effectiveness

Efficiency is important, but a fast AI that gives wrong answers is useless. Effectiveness is what truly matters.

- Improved accuracy: In critical applications like healthcare diagnostics or financial decisions, improving accuracy from 85% to 98% can be life-changing.

- Better generalization: A well-optimized model learns patterns that apply to new, unseen data, which is crucial for handling real-world unpredictability.

- Managing model drift: Ongoing monitoring and periodic retraining keep your AI relevant as new data comes in, so its accuracy doesn’t quietly degrade over time.

- Preventing overfitting and underfitting: This involves finding the sweet spot where a model is neither too simple (underfitting) nor too complex (overfitting), ensuring it can solve new problems.

- Robustness against new data: Your AI must adapt to changing markets, customer behaviors, and new trends rather than breaking down.

As GeeksforGeeks explains, AI optimization is about enhancing performance across multiple dimensions at once. The best strategies improve both efficiency and effectiveness, giving you AI systems that are fast, accurate, cost-effective, and reliable.

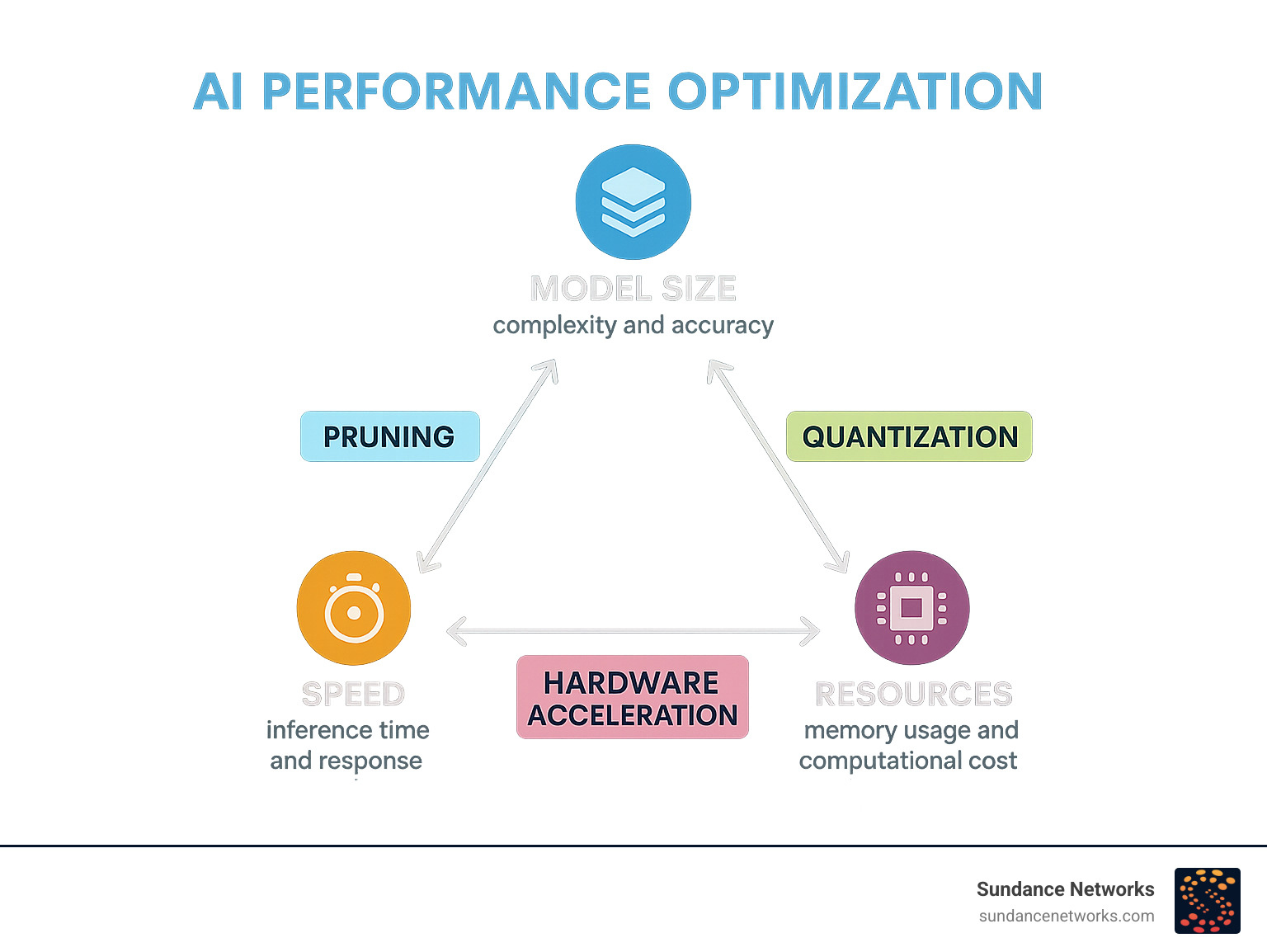

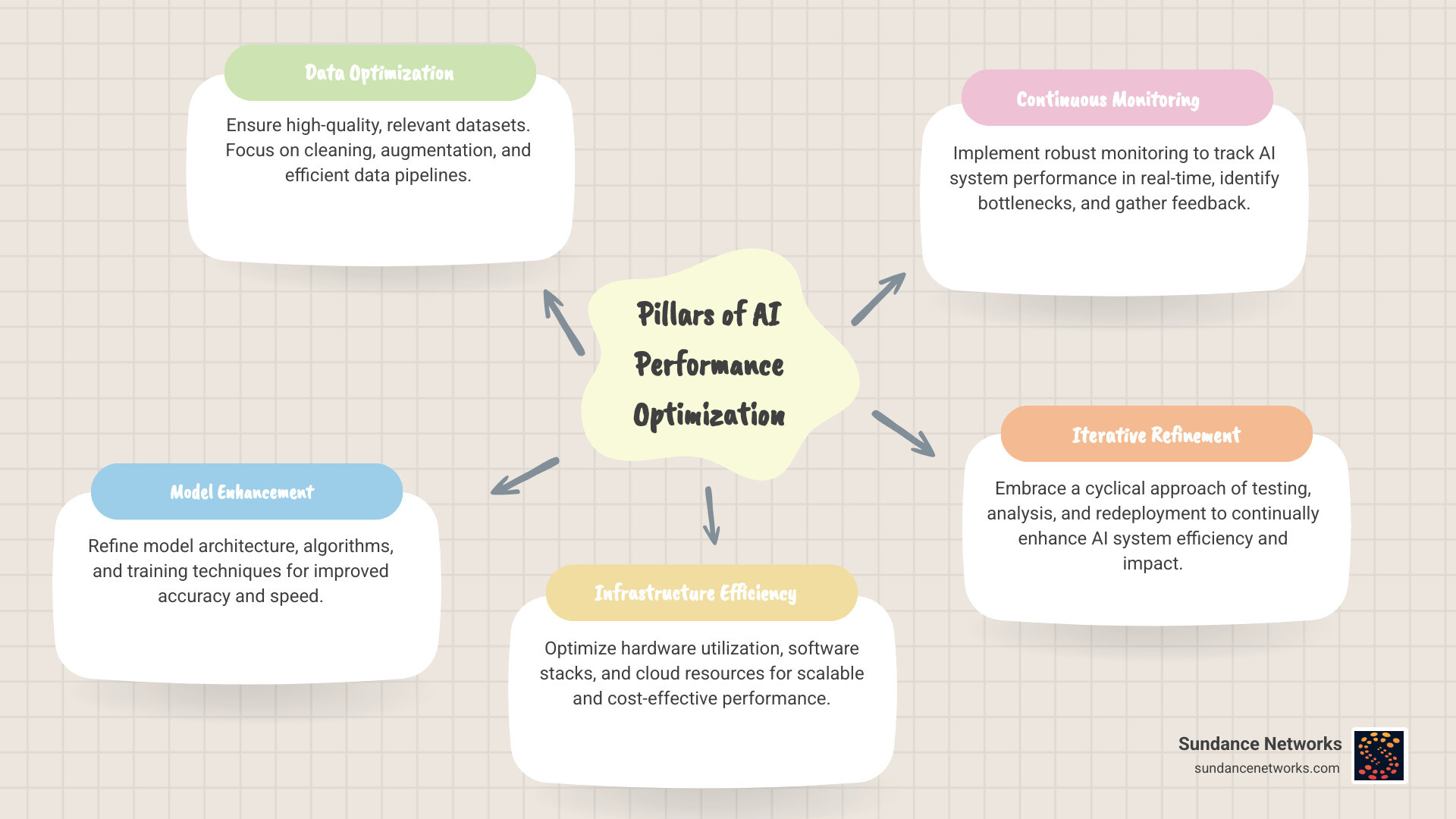

A Three-Pronged Approach: Key Optimization Strategies

Successful performance optimization requires a complete approach that addresses every part of the system. At Sundance, we use a three-pronged strategy focusing on data, the model, and the underlying infrastructure. These pillars work together within the MLOps lifecycle to create a continuous improvement cycle.

Data-Centric AI Performance Optimization

An AI model is only as good as its data. This is the classic “garbage in, garbage out” principle.

- Data quality: We begin by cleaning datasets, fixing errors, filling in missing values, and identifying biases. As V7 Labs points out, high-quality data preprocessing is critical for AI success.

- Feature engineering: This involves selecting the most relevant information from raw data and creating new features to help the model better understand patterns.

- Data augmentation: We create variations of existing data (e.g., rotating images, rephrasing text) to give the model more examples to learn from without collecting new datasets.

- Dataset distillation: This advanced technique condenses massive datasets into much smaller synthetic versions that retain the essential patterns. As ComputerWeekly explains, this makes future training cycles much more efficient.
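As a minimal illustration of data augmentation, the Python sketch below generates a mirrored copy and a rotated copy of a tiny grayscale image stored as a list of pixel rows. The helper names are hypothetical, and the sketch assumes these transformations don’t change the image’s label (true for many object-recognition tasks, but not, for example, when telling a “b” from a “d”).

```python
def flip_horizontal(image):
    """Mirror each pixel row left-to-right."""
    return [list(reversed(row)) for row in image]

def rotate_90_clockwise(image):
    """Rotate the image 90 degrees clockwise."""
    # Reverse the row order, then transpose: columns of the result are the old rows.
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original image plus two label-preserving variants (by assumption)."""
    return [image, flip_horizontal(image), rotate_90_clockwise(image)]

for variant in augment([[1, 2], [3, 4]]):
    print(variant)
# [[1, 2], [3, 4]]
# [[2, 1], [4, 3]]
# [[3, 1], [4, 2]]
```

Training pipelines typically apply transforms like these randomly on the fly during each epoch rather than storing every variant on disk.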

Model-Centric AI Performance Optimization

This pillar focuses on making the AI algorithms themselves smarter and more efficient.

- Model architecture and algorithm selection: Choosing the right neural network or machine learning approach for a specific problem is a critical first step.

- Pruning: This technique removes unnecessary connections in a neural network, creating a leaner model that can run faster with little or no loss of accuracy.

- Quantization and Knowledge Distillation: Quantization reduces the numerical precision of a model’s parameters (e.g., from 32-bit to 8-bit), shrinking its size and speeding up calculations. Knowledge distillation trains a smaller “student” model to mimic a larger “teacher” model.

- Regularization and Hyperparameter Tuning: Regularization techniques (like L1/L2 and dropout) prevent the model from overfitting to its training data. Hyperparameter tuning fine-tunes settings like learning rate and batch size for optimal performance.
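Knowledge distillation is commonly trained with a blended loss: the student learns to match the teacher’s temperature-softened output distribution while still fitting the true labels. The sketch below follows that common formulation (KL divergence between softened outputs, scaled by T²); the function names, the temperature of 4.0, and the 50/50 weighting are illustrative assumptions rather than recommended settings.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-target loss (match the teacher) and hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Multiply by T^2 so the soft-target term keeps a comparable scale as T changes.
    soft_loss = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss

teacher = [3.0, 1.0, 0.2]  # hypothetical teacher logits for one example
student = [2.5, 1.2, 0.1]  # hypothetical student logits for the same example
print(distillation_loss(student, teacher, true_label=0))
```

The softened teacher outputs carry information about which wrong classes the teacher considers “almost right,” which is a large part of what the smaller student gains over training on hard labels alone.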

The team at Index.dev has an excellent guide on how these techniques work together.

Infrastructure-Centric Optimization

The third pillar focuses on the environment where the AI runs.

- Hardware acceleration: Specialized processors like GPUs, TPUs, and FPGAs are designed for the mathematical operations that power machine learning and can transform performance.

- Deployment modifications: Optimizing where and how a model runs—whether moving from cloud to edge or choosing between on-premise and cloud solutions—can dramatically reduce latency.

- Source code improvements: Using more efficient libraries (like Intel’s oneDNN, which can improve performance by 3-8x on Intel hardware), optimizing data structures, and implementing parallel computing can unlock significant gains.

In the Weeds: Core Techniques for Model Improvement

Let’s explore the specific techniques that make AI performance optimization effective. These methods involve clever trade-offs, teaching an AI model to be more efficient without losing its intelligence.

Slimming Down Your Model: Pruning and Quantization

Model pruning and quantization are two key techniques for making neural networks smaller and faster.

Model pruning identifies and removes neural network connections that don’t significantly contribute to the model’s decisions. Research on model pruning shows that many networks are “over-parameterized,” with far more connections than they need. It’s often possible to remove 70-90% of a model’s parameters with little to no impact on accuracy, producing a model that uses less memory and, when the runtime or hardware can exploit the resulting sparsity, runs faster.
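Magnitude pruning, one common way to decide which connections to remove, zeroes out the weights with the smallest absolute values. This pure-Python sketch works on a flat list standing in for one layer’s weights; the function name and the example sparsity level are illustrative.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes.

    weights:  flat list of floats (a stand-in for one layer's weight tensor)
    sparsity: fraction in [0, 1) of weights to remove, e.g. 0.8 for 80%
    """
    if not 0 <= sparsity < 1:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute weight value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]

print(magnitude_prune([0.9, -0.05, 0.3, 0.01, -0.7], sparsity=0.4))
# [0.9, 0.0, 0.3, 0.0, -0.7]
```

In practice the pruned network is usually fine-tuned afterward to recover accuracy, and zeroed weights only translate into a speedup if the runtime uses sparse kernels or the pruning removes whole structures such as channels or attention heads.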

Quantization makes the remaining parts of the model more efficient by reducing the numerical precision of its weights. Most models are trained with 32-bit floating-point numbers, but that much precision often isn’t needed for making predictions. Quantization converts these weights to lower-precision formats, such as 8-bit integers. Going from 32-bit to 8-bit alone cuts weight storage by 75%, since each weight shrinks from 4 bytes to 1; lower bit widths shrink it further.
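The arithmetic behind that 75% figure shows up in a minimal symmetric int8 quantization sketch: each float is divided by a single per-tensor scale, rounded, and stored as a signed 8-bit integer, then multiplied back by the scale when used. This is one simple scheme among several (production toolkits also use zero points, per-channel scales, and calibration data), and the function names are hypothetical.

```python
from array import array

def quantize_int8(weights):
    """Symmetric per-tensor quantization: float weights -> int8 codes plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp into the symmetric int8 range [-127, 127].
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Approximately reconstruct the original float weights."""
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.0, 0.9, -0.33]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Storage comparison: C floats ("f", typically 4 bytes) vs signed chars ("b", 1 byte).
fp32_bytes = len(array("f", weights).tobytes())
int8_bytes = len(array("b", codes).tobytes())
print(fp32_bytes, int8_bytes)  # 20 5 on platforms where a C float is 4 bytes

# Rounding error per weight is bounded by half a quantization step (scale / 2).
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

The precision lost to rounding is what can cost accuracy, which is why quantized models are normally re-evaluated (and sometimes fine-tuned with quantization-aware training) before deployment.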

For example, Google used quantization on its BERT model to achieve a 4x reduction in memory usage without sacrificing accuracy. One financial institution reduced its fraud detection model’s inference time by 73% using these techniques. Pruning and quantization work well together to create smaller, faster, and still intelligent models.

Teaching Your Model to Generalize: Regularization and Hyperparameter Tuning

Overfitting occurs when a model performs well on training data but fails on new, real-world information. Regularization and hyperparameter tuning help prevent this.

- Regularization techniques like L1/L2 regularization and dropout prevent models from memorizing training data by discouraging overly complex solutions and forcing the network to develop more robust pathways.

- Early stopping monitors performance on a separate validation dataset during training and stops the process if performance begins to decline, preventing over-training.

- Hyperparameter tuning involves finding the optimal “settings” that control how a model learns, such as learning rate and batch size. As explained by GeeksforGeeks, small adjustments can dramatically improve accuracy and efficiency.
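Early stopping comes down to a small piece of bookkeeping: track the best validation loss so far and stop once it hasn’t improved for a set number of epochs (the “patience”). The sketch below replays a recorded list of validation losses instead of training a real model; the function name and patience value are illustrative. Deep learning frameworks ship equivalent logic, for example Keras’s `EarlyStopping` callback.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Replay per-epoch validation losses and return (best_epoch, stop_epoch).

    Training "stops" once the loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

print(early_stopping_epoch([0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.5], patience=3))  # (2, 5)
```

Here the weights from epoch 2 would be kept. Note that the lower loss at epoch 6 is never reached: a larger patience trades extra training time for a lower chance of stopping too early, and that trade-off is itself a hyperparameter to tune.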

Our custom IT consulting and system integration services often focus on this fine-tuning to ensure both precision and practical performance.

Leveraging Hardware and Code Improvements

Sometimes the biggest gains come from improving the environment where the model runs.

- Hardware acceleration uses specialized processors. GPUs excel at parallel processing, while TPUs are custom-designed by Google for even greater ML efficiency. Google’s BERT optimization saw a 7x increase in inference speed on TPUs. FPGAs are reconfigurable chips that offer high performance and energy efficiency. Our hardware solutions help businesses choose the right approach.

- Source code improvements can yield surprising gains. Using efficient libraries, parallel computing, and optimized data structures can improve performance without changing the model. For instance, Intel’s oneDNN library can deliver 3-8x performance improvements by optimizing underlying code on Intel hardware.

- Deployment modifications, like moving a model to more powerful hardware or implementing edge computing for lower latency, can also provide immediate benefits.

Real-World Wins, Common Problems, and the Future

When AI performance optimization is applied to business problems, it creates tangible value. Let’s see how this plays out in the real world, the challenges involved, and what’s next.

Optimization in Action: Industry Case Studies

Optimized AI is changing operations across many industries:

- Finance: A major bank reduced its fraud detection model’s response time by 73%, enabling real-time transaction analysis while reducing false positives.

- Healthcare: Optimized models help analyze patient data for personalized treatment recommendations, delivering critical insights in seconds.

- Retail: E-commerce platforms use optimized recommendation engines that require 40% fewer computing resources, lowering infrastructure costs.

- Autonomous Vehicles: Self-driving cars rely on highly optimized models to process visual data and make split-second decisions with on-board computing power.

- Legal Services: An advisory firm used an optimized model to analyze over 100,000 court rulings for relevant precedents in under a minute.

At Sundance, our cyber security solutions leverage optimized AI to identify and respond to threats faster than ever before.

Overcoming Common Optimization Challenges

The path to successful optimization has several key challenges:

- Balancing performance and accuracy: Pushing too hard for model compression can sacrifice the accuracy that makes the AI valuable.

- Resource constraints: Some optimization techniques, like hyperparameter tuning, can be computationally expensive themselves.

- Data quality and availability: Sophisticated optimization cannot fix problems caused by poor or insufficient training data.

- Integration with existing systems: An optimized model is useless if it can’t communicate with your current infrastructure. Our custom IT consulting and system integration experience is invaluable here.

- Keeping pace with evolving data: Models can suffer from “drift” as real-world data changes, requiring ongoing monitoring and maintenance.
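One common way to put a number on drift is the Population Stability Index (PSI), which compares how a feature’s values are distributed in the training data versus in production. This sketch uses equal-width bins derived from the training sample; the bin count, the small epsilon that avoids taking log(0), and the rule-of-thumb thresholds in the comment are widely used conventions, not fixed standards.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Common rule of thumb: < 0.1 stable, 0.1-0.25 moderate shift, > 0.25 significant drift.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]  # same feature, shifted upward
print(round(psi(training, training), 6))  # 0.0
print(psi(training, production) > 0.25)   # True
```

A monitoring job might compute this per feature on a schedule and raise an alert, or trigger retraining, when the index crosses a chosen threshold.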

The Future: Autonomous Optimization

The future of performance optimization lies in AI systems that can optimize themselves. AI is not yet ready to optimize massive software systems fully autonomously (current LLMs, for instance, are constrained by their context windows), but progress is rapid.

We are seeing exciting developments in automated machine learning (AutoML) tools that handle hyperparameter tuning and model selection with minimal human input. Self-optimizing systems that monitor their own performance and trigger retraining are also emerging.

AI-assisted continuous integration and deployment (CI/CD) for machine learning is becoming standard practice, helping ensure that models in production remain optimal. While AI won’t replace human experts soon, it is becoming a powerful assistant, allowing our team at Sundance Networks to focus on strategic challenges while AI handles repetitive optimization tasks.

Frequently Asked Questions about AI Model Optimization

What is the difference between model pruning and quantization?

Model pruning reduces a model’s complexity by removing redundant components, like unnecessary weights and neurons. This makes the model’s structure leaner.

Quantization reduces the numerical precision of the model’s remaining parameters, for example, by converting 32-bit numbers to 8-bit integers. This shrinks the model’s size.

These techniques are often used together. You can first prune the unnecessary parts and then quantize what’s left to achieve maximum compression and speed.

How do you measure the success of AI optimization?

Success is measured by a combination of technical metrics and business outcomes. We track:

- Technical Metrics: Key indicators include inference latency (response time), throughput (predictions per second), model size, and power consumption.

- Model Performance: We ensure that core metrics like accuracy, precision, recall, and F1-score are maintained or improved. There is often a trade-off between efficiency and accuracy that must be carefully managed.

- Business KPIs: Success is defined by real-world impact. This includes reduced operational costs, faster decision-making, improved customer satisfaction, and other goals specific to your business.
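The technical metrics above can be measured with a small harness around any prediction function. This sketch times sequential single-input calls to a hypothetical `predict` callable and reports median (p50) and 95th-percentile (p95) latency plus throughput. It assumes Python 3.8+ for `statistics.quantiles`, and it deliberately ignores batching and concurrency, which usually change throughput substantially.

```python
import statistics
import time

def benchmark(predict, inputs, warmup=5):
    """Time sequential calls to `predict`; report p50/p95 latency (ms) and throughput."""
    if len(inputs) < 2:
        raise ValueError("need at least two inputs to compute percentiles")
    for x in inputs[:warmup]:
        predict(x)  # untimed warm-up calls (caches, lazy initialization)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points; index 94 is the 95th percentile
    return {
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": cuts[94] * 1000,
        "throughput_per_s": len(inputs) / elapsed,
    }

def fake_predict(x):
    """Hypothetical stand-in for a real model's inference call."""
    return sum(i * x for i in range(1000))

stats = benchmark(fake_predict, list(range(200)))
print(stats)
```

Running the same harness on the baseline and the optimized model, with the same inputs and hardware, gives a like-for-like comparison; tail latency (p95 or p99) often matters more to users than the average.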

At Sundance Networks, we align these metrics with your business goals to ensure optimization delivers genuine value.

Can optimization introduce bias into an AI model?

Yes, this is a critical risk that must be managed carefully. Bias can be introduced or amplified if optimization is not handled correctly.

For example, dataset distillation or pruning could inadvertently remove data points or features that are crucial for making fair predictions about underrepresented groups or edge cases. The result could be a model that is faster but less equitable.

To prevent this, we incorporate continuous monitoring, thorough testing on diverse datasets, and regular fairness audits throughout the optimization process. As Lorca discusses, the ethics of using AI is a complex but essential consideration. An AI model isn’t truly optimized unless it’s both efficient and fair.
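A basic version of that testing is to compare accuracy per subgroup before and after optimization, since a stable overall accuracy figure can hide a regression concentrated in one small group. The helpers below are hypothetical and far from a complete fairness audit; the 2-percentage-point tolerance is an arbitrary example, and real audits typically examine several metrics (such as false-positive rates) as well.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

def flag_regressions(baseline_pred, optimized_pred, y_true, groups, tolerance=0.02):
    """Return groups whose accuracy dropped by more than `tolerance` after optimization."""
    before = accuracy_by_group(y_true, baseline_pred, groups)
    after = accuracy_by_group(y_true, optimized_pred, groups)
    return {g: (before[g], after[g]) for g in before if before[g] - after[g] > tolerance}

y_true    = [1, 0, 1, 1, 0, 1]
groups    = ["a", "a", "a", "b", "b", "b"]
baseline  = [1, 0, 1, 1, 0, 1]  # hypothetical predictions from the original model
optimized = [1, 0, 1, 0, 1, 1]  # hypothetical predictions after compression
print(flag_regressions(baseline, optimized, y_true, groups))
# {'b': (1.0, 0.3333333333333333)}
```

In this toy example the optimized model’s overall accuracy drops from 100% to about 67%, but the per-group view shows the entire loss falls on group “b”.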

Unlock Your AI’s (and Your Business’s) True Potential

An AI system might be brilliant, but if it’s slow and expensive, it’s not living up to its promise. AI performance optimization transforms impressive technology into a practical, profitable business asset.

Optimization delivers tangible benefits. Operational efficiency means your AI runs faster on fewer resources, cutting costs. Model effectiveness ensures your AI makes reliable decisions that improve over time. These aren’t just technical tweaks; they translate directly to better business outcomes.

By following a three-pronged approach that focuses on data, models, and infrastructure, you can maximize the return on your AI investment. Techniques like pruning, quantization, and regularization, combined with hardware acceleration, are proven methods for creating systems that are both smart and practical.

Real-world examples show the impact: banks detecting fraud in real time, retailers cutting computing resource use by 40%, and an advisory firm searching more than 100,000 court rulings in under a minute. While challenges like balancing performance with accuracy exist, they are manageable parts of the journey.

Looking ahead, automated machine learning (AutoML) and self-optimizing systems are making optimization more accessible, freeing up human experts to focus on strategy and innovation.

At Sundance, we’ve seen how the right optimization strategy can transform a business. We help you build technology solutions that grow with your business, protect your data, and serve your community.

Your AI has incredible potential. Don’t let it sit idle when it could be driving real results. Get started with our AI Solutions and discover what optimized AI can do for your business.